Is AI Really Ready: Jumping the Gun or a Leap Forward?

04 Jun, 2024 | 8 minute read

In the relentless pursuit of staying ahead of the competition, organisations are eager to embrace the transformative potential of Artificial Intelligence (AI). That makes sense – it's the buzzword of the moment, and those who master the tech early will surely be the ones to reap the rewards, right? It's a prevailing theory we see repeated time and time again, with slight variations across contexts and sectors.

“AI is not going to take your job. The person who uses AI well might take your job.” - Ted Sarandos, co-CEO of Netflix

In the race to adopt AI and lead the field, we've inevitably seen some stumbles, though. A recent news story about problems with Google's new AI Overviews feature got us thinking: are there times when companies rush AI integration a bit too quickly?

In this blog, we'll outline:

- AI's Unfulfilled Promise

- The Pitfalls of AI Hallucination

- AI's Unreliable Nature

- What We Can Do to Help Remedy

- The True Potential of AI

AI's Unfulfilled Promise

While AI holds immense promise for automating tasks, enhancing decision-making and unlocking new possibilities, the Google news in particular had our team wondering whether some businesses are getting ahead of themselves. In some cases, organisations appear to be implementing AI solutions without fully understanding their limitations, leading to underwhelming results and, occasionally, detrimental consequences.

In today's digital age, where reputation is everything and customers are spoilt for choice, one bad experience can spell disaster. Customers will quickly abandon a brand that delivers a subpar AI interaction, eroding trust and potentially prompting negative online reviews that leave a stain that's hard to clean away.

The Pitfalls of AI Hallucination

One of the most concerning issues with current AI technology is its tendency towards "AI hallucination". This phenomenon occurs when chatbots or other AI systems generate plausible-sounding but factually incorrect information, leading users astray.

For instance, a chatbot designed to provide medical advice may confidently recommend a harmful medication based on inaccurate data.

The underlying problem is that chatbots do not inherently know whether they are telling the "truth". They are not sentient; they are algorithms trained on large amounts of data to produce the most plausible-sounding response to a prompt. That makes them prone to errors, which can have severe implications in high-stakes applications like healthcare or finance.

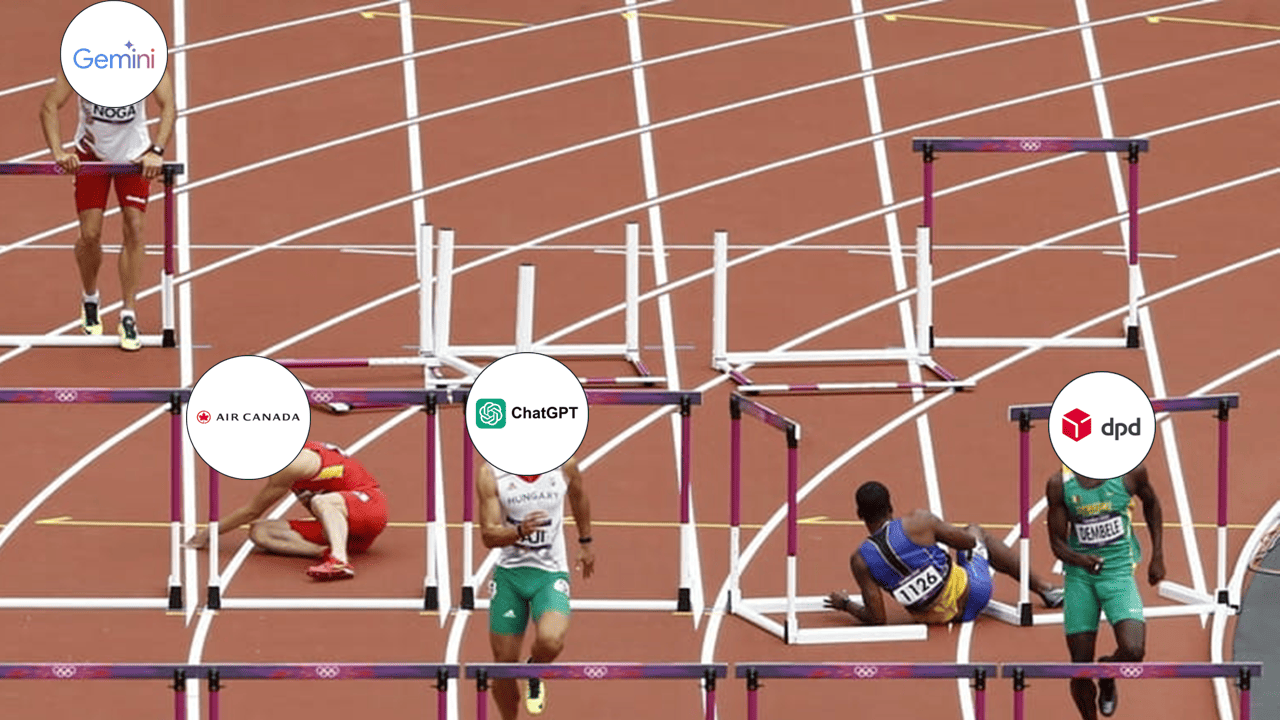

One example of an AI mishap comes from DPD. In January, the firm was forced to disable part of its online support chatbot after it swore at a customer. News of the incident spread like wildfire across social media, with one post viewed over 800,000 times in 24 hours.

The backlash from this event forced DPD to take immediate action. They not only disabled the offending part of the chatbot but also issued public apologies and promised a thorough investigation to prevent similar occurrences in the future. This response aimed to address customer concerns and restore trust in their service. However, the incident served as a reminder of the potential pitfalls of relying heavily on automated systems without robust safeguards.

Another example is Air Canada's recent PR mishap. A customer trying to book a flight with Canada's leading airline contacted its chatbot to ask about bereavement fares. He wanted to know what qualified for the discount and whether he could get a refund if he had already booked a ticket.

The chatbot incorrectly told him he could apply for a retroactive refund within 90 days. However, after following the chatbot's instructions, the customer was told that bereavement fares didn't apply to past travel and was directed to the airline's website for more information.

Unhappy with how Air Canada handled the matter, the customer took legal action against the airline to recover the fare difference. Air Canada responded by arguing that the chatbot was a "separate legal entity" responsible for its own actions. A Canadian tribunal rejected that argument and ordered the airline to pay.

All this underscores the idea that AI hallucination isn't just about misinformation; it raises critical issues about trust, accountability, and the limitations of AI technology.

AI's Unreliable Nature

AI systems are only as reliable as the data they are trained on. If the training data is biased or incomplete, the AI will inherit and perpetuate those biases. This can lead to discriminatory or unfair outcomes, undermining the very principles AI is meant to uphold: "quality information and a better standard for everyone".

Moreover, AI systems often lack the common sense and critical thinking skills humans possess. This makes them vulnerable to manipulation and exploitation by malicious actors, and to misuse by well-intentioned users who may not fully grasp the system's limitations.

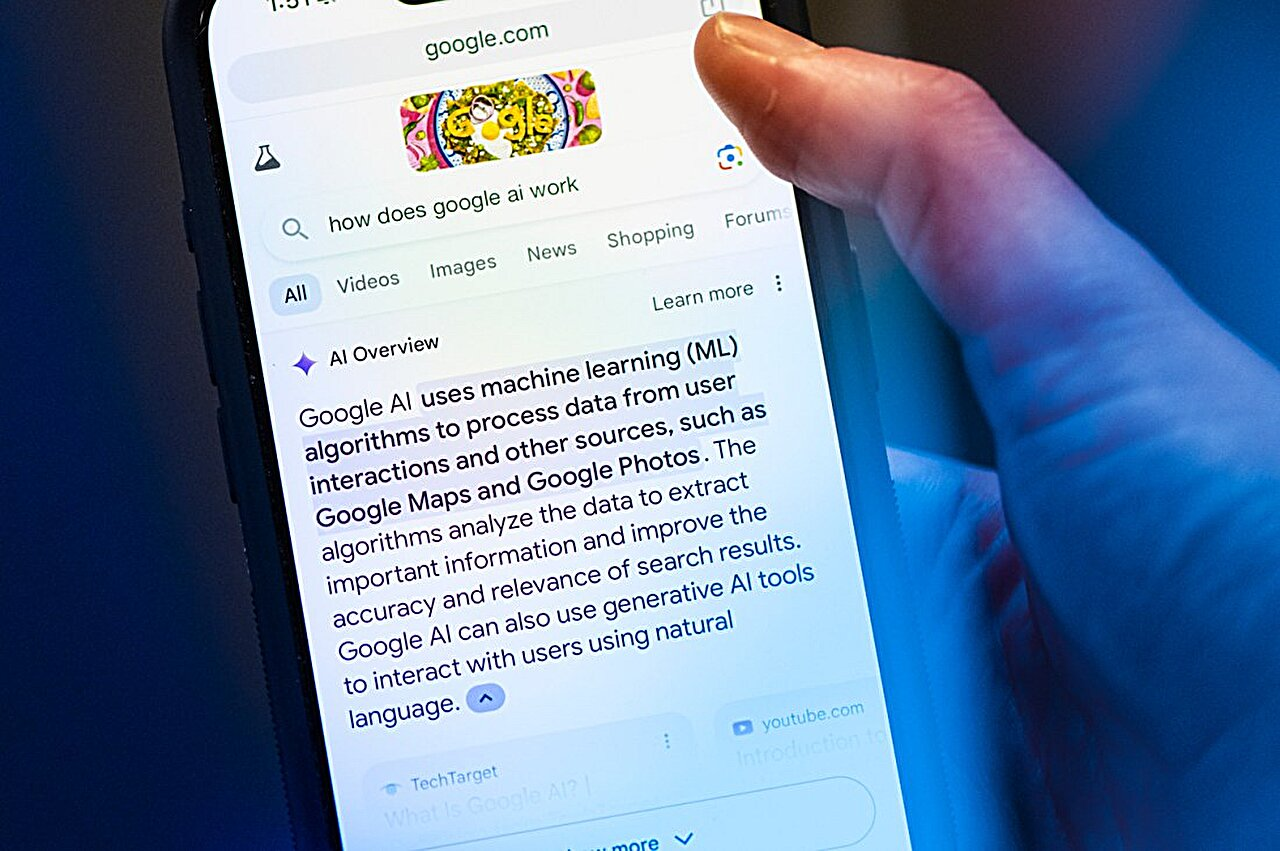

Perhaps the biggest example of AI being served up before it's fully developed comes from Google, a veteran in the field. It recently made "AI Overviews" the default for US search results, with plans for a global rollout. However, concerns have surfaced about the accuracy and safety of these AI-generated summaries, with some containing misinformation or potentially harmful suggestions.

In response to these concerns, Google issued a statement acknowledging the issue:

“The vast majority of AI Overviews provide high quality information, with links to dig deeper on the web. Many of the examples we’ve seen have been uncommon queries, and we’ve also seen examples that were doctored or that we couldn’t reproduce.

We conducted extensive testing before launching this new experience, and as with other features we’ve launched in Search, we appreciate the feedback. We’re taking swift action where appropriate under our content policies, and using these examples to develop broader improvements to our systems, some of which have already started to roll out.”

Google has historically been cautious about releasing AI features, and that caution stems from its core business model. Unlike many tech companies, Google relies heavily on users trusting its search engine to provide accurate information. Rushing out AI features before they are fully developed could harm that trust.

This highlights a tough decision for Google: prioritise reliability and potentially lose ground to competitors (Microsoft, OpenAI, etc.), or integrate potentially risky AI and jeopardise its search dominance.

This recent incident with inaccurate AI Overviews exemplifies Google's concerns. While the errors were uncommon, they highlight the need for further development before a wider release.

“But some odd, inaccurate or unhelpful AI Overviews certainly did show up. And while these were generally for queries that people don’t commonly do, it highlighted some specific areas that we needed to improve.” - Statement by Liz Reid, Google’s head of search

What We Can Do to Help Remedy

So, are all these examples just bumps in the road, signs of growing pains in AI adoption? Even if they are, they raise a crucial question: is AI truly mature enough for widespread adoption? The answer, unsurprisingly, is nuanced and likely lands somewhere in the middle.

Significant change always brings challenges. However, this underscores the importance of caution for organisations implementing AI solutions. It shouldn't be a case of "everyone's doing it, so we should too." Careful consideration is vital – businesses need to evaluate their specific needs and determine if AI, in its current state, is the right fit for their business model.

It may well be that current AI systems are a perfect fit for a given business and work as intended. Even so, we believe it is essential that organisations do the following:

Thoroughly assess the readiness of the organisation and the AI solution: Conduct a comprehensive evaluation of the organisation's AI maturity, data quality, and available resources. Ensure the AI solution aligns with the organisation's goals and is fit for purpose.

Invest in human capital: Recognise that AI is not a replacement for human expertise but a tool to augment it. A well-built AI team can help mitigate risks and set the business up for success. Skipping steps isn't an option. To be ahead of the curve, you need to hire people who are ahead of the curve.

Prioritise transparency and accountability: Establish clear guidelines for AI implementation, including ethical considerations and mechanisms for addressing errors or misuse. Foster a culture of transparency and accountability to build trust with stakeholders.

Cost vs. Benefit: Implementing AI can be expensive. Does the potential gain outweigh the investment for your specific needs?

Current Workflows: How well does AI integrate with your existing processes? Don't force a square peg into a round hole.

The True Potential of AI

When implemented thoughtfully and responsibly, AI can unlock significant benefits for organisations. By automating mundane tasks, AI frees up human workers to focus on more strategic and creative endeavours. It can also provide valuable insights, identify patterns, and predict outcomes, empowering organisations to make informed decisions.

However, achieving the full potential of AI requires a measured approach, one that balances innovation with caution. By addressing the current limitations and ensuring responsible implementation, organisations can harness the transformative power of AI while avoiding its risks.

This measured approach hinges on recruiting the right staff. A strong team with expertise in AI, data analysis, and human-centred design is crucial to guide development, address limitations, and ensure responsible integration into the workforce. This is where MRJ excels. With a rich history of scouting the best tech talent, we are uniquely positioned to help organisations build the robust teams necessary to navigate the complexities of AI implementation and maximise its potential. For anyone curious, feel free to contact us for an informal chat.

Wrapping Up: Are We Jumping the Gun with AI Implementation?

The question of whether organisations are jumping the gun on AI implementation is complex. While AI holds immense promise, its current limitations and the risk of unintended consequences demand a cautious approach. By prioritising readiness, transparency and investment in human capital, organisations can harness the true potential of AI while avoiding the pitfalls. But that only works when it's done right, with a measured strategy in place.

For those in the tech industry considering a move into the AI field, why not check out our blog, "How AI in Software Development Can Transform Your Career"?